Management research, health services research, operations research, quality and safety research, implementation research: a crowded landscape of terms describing concepts that are, at best, not entirely distinct and, at worst, synonyms. Some definitions are given in Table 1. Perhaps the easiest one to deal with is ‘operations research’, which has a rather narrow meaning: it describes mathematical modelling techniques used to derive optimal solutions to complex problems, typically concerning the flow of objects (such as people) over time. It is therefore a subset of the broader genre covered by this collection of terms. Quality and safety research puts the cart before the horse by defining research according to the intended objective of an intervention, rather than according to where in the system the intervention acts. Since interventions at the system level may have many downstream effects, it seems illogical, and indeed potentially harmful, to define research by its objective, an argument made in greater detail elsewhere.[1]

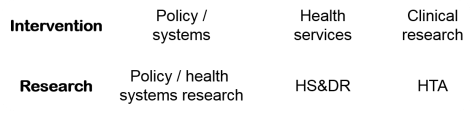

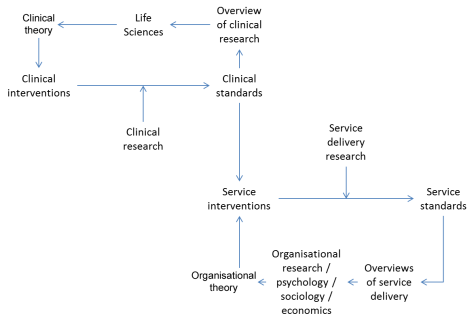

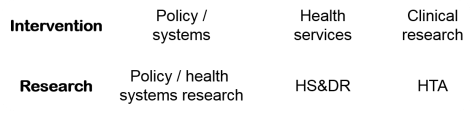

Health Services Research (HSR) can be defined as management research applied to health, and it is an acceptable umbrella term for the construct we seek to define. For those who think the term HSR leaves out the development and evaluation of interventions at the service level, the term Health Services and Delivery Research (HS&DR) has been devised. We think this is a fine term to describe management research as applied to the health services, and we are pleased that the National Institute for Health Research (NIHR) has embraced it and now has two major funding schemes: the Health Technology Assessment (HTA) programme, dealing with clinical research, and the HS&DR programme, dealing with management research. In general, interventions and their associated research programmes can be neatly represented in a framework based on a modified Donabedian chain:

So what about implementation research, then? Wikipedia defines implementation research as “the scientific study of barriers to and methods of promoting the systematic application of research findings in practice, including in public policy.” However, a recent paper in the BMJ states that “considerable confusion persists about its terminology and scope.”[2] Surprised? In what respect does implementation research differ from HS&DR?

Let’s start with the basics:

- HS&DR studies interventions at the service level. So does implementation research.

- HS&DR aims to improve the outcomes of care (effectiveness / safety / access / efficiency / satisfaction / acceptability / equity). So does implementation research.

- HS&DR seeks to improve outcomes / efficiency by making sure that optimum care is implemented. So does implementation research.

- HS&DR is concerned with the implementation of two kinds of knowledge: first, knowledge about what clinical care should be delivered in a given situation, and second, knowledge about how to intervene at the service level. So does implementation research.

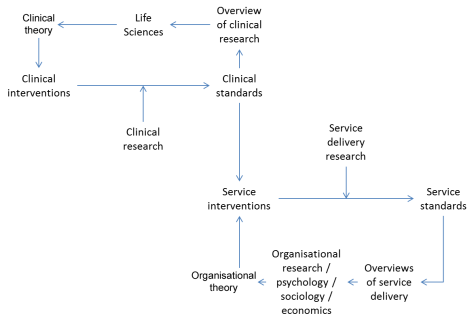

This latter concept, concerning the two types of knowledge (clinical and service delivery) that are implemented in HS&DR, is a critical one. It seems to be poorly understood and causes many researchers in the field to ‘fall over their own feet’. The concept is represented here:

HS&DR / implementation research resides in the South East quadrant.

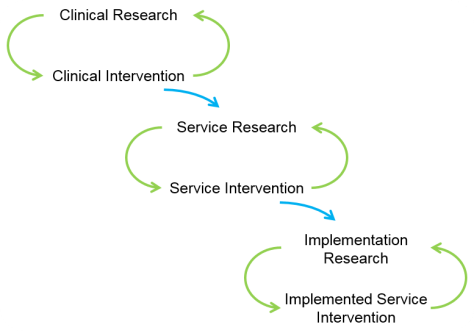

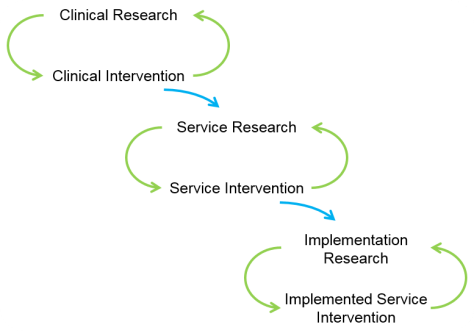

Despite all of this, some people insist on keeping the distinction between HS&DR and Implementation Research alive, as in the recent Standards for Reporting Implementation Studies (StaRI) Statement.[3] The thing being implemented may be a clinical intervention, in which case the above figure applies. Or it may be a service delivery intervention. In that case, they argue that once the service intervention has been proven effective it must then be implemented, and that this implementation can itself be studied. In effect, they are arguing for a third ring:

This last loop, in the extreme South East, is redundant because:

- Research methods do not depend on whether the research is labelled HS&DR or so-called Implementation Research (as the StaRI authors acknowledge). So we could end up in the odd situation where the HS&DR is a before-and-after study and the Implementation Research is a cluster randomised controlled trial (RCT)! The so-called Implementation Research is better thought of as more HS&DR; seldom is one study sufficient.

- HS&DR itself requires the tenets of Implementation Science to be in place, for example following the Medical Research Council (MRC) framework and identifying barriers and facilitators. Every evaluative trial involves implementation, so all HS&DR is Implementation Research: some is early and some is late.

- Replication is a central tenet of science and enables the role of context to be explored. For example, “mother and child groups” is an intervention that was shown to be effective in Nepal. It has since been ‘implemented’ in six further sites, each evaluated by a cluster RCT. Four of the seven studies yielded positive results and three yielded null results. Comparing and contrasting these studies has yielded a plausible theory, so we have a good idea of for whom the intervention works, and why.[4] All seven studies are implementations, not just the latter six!

So, logical analysis does not yield any clear distinction between Implementation Research on the one hand and HS&DR on the other. The terms might denote some subtle shift of emphasis, but as a communication tool in a crowded lexicon, we think that Implementation Research is a term liable to sow confusion, rather than generate clarity.

Table 1

| Term | Definition | Source |
| --- | --- | --- |
| Management research | “…concentrates on the nature and consequences of managerial actions, often taking a critical edge, and covers any kind of organization, both public and private.” | Easterby-Smith M, Thorpe R, Jackson P. Management Research. London: Sage, 2012. |
| Health Services Research (HSR) | “…examines how people get access to health care, how much care costs, and what happens to patients as a result of this care.” | Agency for Healthcare Research and Quality. What is AHRQ? [Online]. 2002. |
| HS&DR | “…aims to produce rigorous and relevant evidence on the quality, access and organisation of health services, including costs and outcomes.” | INVOLVE. National Institute for Health Research Health Services and Delivery Research (HS&DR) programme. [Online]. 2017. |
| Operations research | “…applying advanced analytical methods to help make better decisions.” | Warwick Business School. What is Operational Research? [Online]. 2017. |
| Patient safety research | “…coordinated efforts to prevent harm, caused by the process of health care itself, from occurring to patients.” | World Health Organization. Patient Safety. [Online]. 2017. |
| Comparative effectiveness research | “…designed to inform health-care decisions by providing evidence on the effectiveness, benefits, and harms of different treatment options.” | Agency for Healthcare Research and Quality. What is Comparative Effectiveness Research. [Online]. 2017. |
| Implementation research | “…the scientific inquiry into questions concerning implementation – the act of carrying an intention into effect, which in health research can be policies, programmes, or individual practices (collectively called interventions).” | Peters DH, Adam T, Alonge O, Agyepong IA, Tran N. Implementation research: what it is and how to do it. BMJ. 2013; 347: f6753. |

We have ‘audited’ the BMJ article by David Peters and colleagues[2] and found that every attribute they claim for Implementation Research applies equally well to HS&DR, as you can see in Table 2. However, this does not mean that we should abandon ‘Implementation Science’, a set of ideas that is useful when designing an intervention. For example, stakeholders of all sorts should be involved in the design, barriers and facilitators should be identified, and so on. By analogy, we think Safety Research is a back-to-front term, but we applaud the tools and insights that ‘safety science’ provides.

Table 2

| Attribute claimed for Implementation Research (Peters et al.[2]) |
| --- |
| “…attempts to solve a wide range of implementation problems” |
| “…is the scientific inquiry into questions concerning implementation – the act of carrying an intention into effect, which in health research can be policies, programmes, or individual practices (…interventions).” |
| “…can consider any aspect of implementation, including the factors affecting implementation, the processes of implementation, and the results of implementation.” |
| “The intent is to understand what, why, and how interventions work in ‘real world’ settings and to test approaches to improve them.” |
| “…seeks to understand and work within real world conditions, rather than trying to control for these conditions or to remove their influence as causal effects.” |
| “…is especially concerned with the users of the research and not purely the production of knowledge.” |
| “…uses [implementation outcome variables] to assess how well implementation has occurred or to provide insights about how this contributes to one’s health status or other important health outcomes.” |
| …needs to consider “factors that influence policy implementation (clarity of objectives, causal theory, implementing personnel, support of interest groups, and managerial authority and resources).” |
| “…takes a pragmatic approach, placing the research question (or implementation problem) as the starting point to inquiry; this then dictates the research methods and assumptions to be used.” |
| “…questions can cover a wide variety of topics and are frequently organised around theories of change or the type of research objective.” |
| “A wide range of qualitative and quantitative research methods can be used…” |
| “…is usefully defined as scientific inquiry into questions concerning implementation – the act of fulfilling or carrying out an intention.” |

— Richard Lilford, CLAHRC WM Director and Peter Chilton, Research Fellow

References:

1. Lilford RJ, Chilton PJ, Hemming K, Girling AJ, Taylor CA, Barach P. Evaluating policy and service interventions: framework to guide selection and interpretation of study end points. BMJ. 2010; 341: c4413.

2. Peters DH, Adam T, Alonge O, Agyepong IA, Tran N. Implementation research: what it is and how to do it. BMJ. 2013; 347: f6753.

3. Pinnock H, Barwick M, Carpenter CR, et al. Standards for Reporting Implementation Studies (StaRI) Statement. BMJ. 2017; 356: i6795.

4. Prost A, Colbourn T, Seward N, et al. Women’s groups practising participatory learning and action to improve maternal and newborn health in low-resource settings: a systematic review and meta-analysis. Lancet. 2013; 381: 1736-46.

*Naming of Parts by Henry Reed, which Ray Watson alerted us to:

Today we have naming of parts. Yesterday,

We had daily cleaning. And tomorrow morning,

We shall have what to do after firing. But to-day,

Today we have naming of parts. Japonica

Glistens like coral in all of the neighbouring gardens,

And today we have naming of parts.

…